Qualitative Secondary Analysis – an introduction

Presenter(s): Kahryn Hughes

At its simplest, Qualitative Secondary Analysis (hereafter QSA) refers to the re-use of existing qualitative data generated for previous research studies, for new purposes (Bishop and Kuula-Luumi, 2017). There has been an international flourishing of data archives and repositories, with enhanced accessibility. Over the past ten years or so, the trend for international and UK funding councils, including UKRI, has been away from funding primary research and towards the reuse of existing data as far as possible. Despite this, there have been continuing debates around QSA which often include questions concerning the epistemological, contextual, and practical embeddedness of the original research studies (Irwin, 2013).

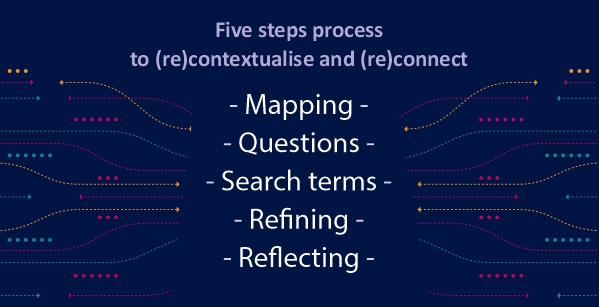

This resource shares two strategies, (re)contextualisation and (re)connection, that help researchers reuse data by examining and describing their relationships to those data:

- (re)contextualisation – the context of the data: how these data are of their time and place;

- (re)connection – how the data might be used now, beyond their ‘original’ contexts.


Questions for secondary analysts to consider

Epistemological questions commonly concern the specifics of data production in the original research, where research teams had the opportunity to decide on and shape the different kinds of data generated and their content. This shaping is inevitable: it happens through the theories and concerns of the originating team, their methodological approaches and research design, and also through their ongoing decision making and responsive engagement throughout a research study.

These decisions, namely the pragmatics of research, alongside the relationships between the research teams, the relationships teams have with their participants, gatekeepers and the broader networks through which the research is conducted, as well as the knowledge, evidence, policy and legislative landscapes situating the research, all contribute to the contextual specifics of the research.

A main concern is that qualitative research data are distinct from other data because they are co-constructed between the researcher and the researched (Mauthner et al., 1998; see also Hammersley, 2010). How, then, could these data simply be removed from their formative contexts and reused elsewhere? This concern was especially important given that the contextual embeddedness of the data gave them their meaning, and that these meanings were connected to the contexts of the lives of the participants producing them. In effect, how could data be removed from the times through and about which they were produced? How could data reusers, or secondary analysts, come to those data with the same contextual understandings?

This process of ‘getting to grips’ with the contextual embeddedness of data also raised practical questions, such as the need for researchers to identify, access and familiarise themselves with large datasets and the samples from which they were drawn, and the consequent impact on researchers’ budgets and time. In other words, given the specificity of qualitative data, how could they be repurposed to answer new research questions, or even to answer similar questions by new researchers in new contexts? The idea that new researchers could simply stroll into an existing dataset and reuse it unproblematically implied that questions of context, and of the temporal immanence of human dynamics and engagement, were either erased or ignored.

Using an ‘investigative epistemology’

In 2007, in response to these arguments, Jennifer Mason advocated a shift beyond questions of whether we should re-use qualitative data, to questions of how we can. Advancing an ‘investigative epistemology’, Mason’s intervention paved the way for greater innovation and creativity in methods of qualitative data reuse, including ‘energetically and creatively seeking out a range of data sources to answer pressing research questions in quite distinctive ways’ (Mason, 2007: 2).

Niamh Moore (2007) pointed out that for analysis to become ‘secondary’, data must become ‘secondary’ too. She argued that to conceive of ‘pre-existing data’ is to be blind to how data are co-produced in new contexts (ibid.). She proposed instead that (re)using data involves primary analysis of a different order of data (Moore, ibid.; see also Henderson et al., 2006; Hughes et al., 2021). Here, if we indeed accept that data are co-produced, the challenge for QSA researchers is to develop strategies for the comprehensive capture of how this occurs (Hughes et al., 2021). This involves researchers reflecting on the ‘embedded contexts’ of both their own and the previous study (Irwin et al., 2012) to ‘make sense’ of what those data are and how their ‘usefulness’ may be understood. Reflexive engagement is required concerning how we may bring differently constituted datasets into analytic conversation and alignment (Irwin, 2013). In other words, recontextualising data/sets involves recasting them as theoretical objects, identifying not only how they were produced, but how they may be repurposed for new questions and research aims (see also Tarrant, 2017; Tarrant and Hughes, 2019; Hughes and Tarrant, 2020a; Hughes et al., 2021).

Qualitative Secondary Analysis: (re)contextualisation and (re)connection

QSA can be seen as a process wherein researchers seek to apprehend data of different orders. We use the language of Qualitative Secondary Analysis to emphasise the different forms of ‘remove’ secondary analysts have from the original research contexts, as well as their ‘proximity’ to those data, including any connections such proximity may entail.

These forms of ‘remove’ include: temporal, where researchers must account for the time that has passed since the original research; epistemological, namely the different questions and attendant theoretical concerns with which researchers approach existing datasets; and relational, where the relevant relationships concern not only those within the new research but also the broader relationships contextualising that research.

To think through these forms of remove in the process of recasting research data as theoretical objects, we propose the strategies of (re)contextualisation and (re)connection, which allow researchers to examine and describe their relationships to the data.

(Re)Contextualisation

The (re)contextualisation of data involves critical engagement with the contexts of the original studies. Questions to support such critical engagement include:

- How are these data of their time and place?

- How might these data speak both to and about the social contexts through which they were produced?

- How far can these data be used to speak beyond their original contexts, and for what purposes?

- What are the limits to re/apprehending these data and using them as a different form or order of evidence?

- How far do the contextual specifics we have identified limit the claims we can make based on them?

In effect, these questions help us to work out how these data are of their time and place, how they can be used to tell us about the social world, and how far they can be reused.

(Re)Connection

The (re)connection of data emphasises how these data may be used to speak beyond the contexts of their becoming. Questions that allow researchers to develop a case for reuse include:

- What are our connections to the data and the contexts of their production?

- How might these data be re/used to build a new study and/or generate new findings?

- How might the datasets need to be developed, extended, augmented and/or recast to support and develop new analyses?

- How might ‘distance’ (temporal, relational, theoretical) from the contexts of the original production of these data be analytically leveraged?

- What new ‘freedoms to tell’ are made possible by remove from the contexts of data production?

These strategies serve to overcome simplistic binaries between primary and secondary analysts, which are often invoked to imply a hierarchy of authentic connection between researchers and datasets. Primary analysts, those involved in the formative contexts of research studies, are often credited with having special claims on those data. We wouldn’t deny this. However, secondary analysts can have such claims too: as Susie Weller points out, in the careful and painstaking process of familiarising themselves with the data, researchers develop new relationships with the data and new emotional connections with once unfamiliar participants.

Conclusion

We therefore begin with questions of how we determine whether, and how, data generated in previous studies can be used as evidence to inform on the social world (Hughes et al., 2020). Temporal and epistemic ‘distance’ from original study contexts then ceases to be an inevitable analytic deficit, and instead offers opportunities for new insights not always available to researchers close to the formative contexts of research.

The language of QSA is one infused with temporal modes of epistemological reflexivity. Seeing data with a new temporal perspective can enhance understandings of social processes (Duncan, 2012; Hughes et al., 2021) and provide fruitful ground for exciting and innovative research generating new findings and insights.

About the author

Kahryn is an internationally recognised scholar in the field of qualitative secondary analysis, and lead editor of 'Qualitative Secondary Analysis' (SAGE), for which she has also developed innovative methods training. As a Senior Fellow of the National Centre for Research Methods (NCRM), she is responsible for commissioning Qualitative Longitudinal and Qualitative Secondary Analysis research methods training.

- Published on: 26 September 2023

- Event hosted by: Leeds University

- Keywords: Qualitative Secondary Analysis

- To cite this resource:

Kahryn Hughes. (2023). Qualitative Secondary Analysis – an introduction. National Centre for Research Methods online learning resource. Available at https://www.ncrm.ac.uk/resources/online/all/?id=20818 [accessed: 20 December 2025]
