A person using a mobile phone while sat at a laptop.

The research community is currently fortunate to have a large number of high-quality web survey software packages. For example, Capterra (sourced 19 December 2021) lists 553 packages, 311 of which have been rated four or five stars. When selecting which package to use, my two main criteria are that the software has ‘skip logic’ and an ‘optimised design’ for mobile phones. According to the Capterra website, 159 packages have both of these attributes.

However, despite all this choice and the array of functions these packages offer, users of web survey software still need to know about questionnaire design. For example, although many software packages include ‘question libraries/templates’, not all of these examples are optimal or even desirable. In one package, a template question had answer categories with overlapping number ranges. In another, the answer scale was neither bipolar nor unipolar. In a third, a semantic differential scale was set up with cryptic headers when no headers were needed.

Another consideration is that web survey packages often offer background templates. Some of these are inappropriate because they make the survey questions hard to read (for instance, backgrounds in bright purple and shocking pink, or ones crowded with images).

Web survey packages offer more question formats than paper questionnaires do. It may be tempting to use these formats simply because they are at hand, but just because a format is technically possible does not mean it should be used. For example, would you or your respondents want to fill in a running tally task that must sum to 100, or a question with a matrix of 50 dropdown boxes?

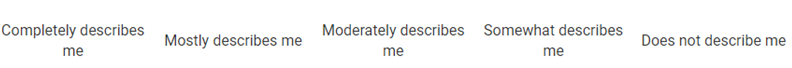

With some packages, a problem can also occur when answer categories do not display as expected on the respondent’s screen (even on computers). For example, the display can look like the first example below when we want the second one.

Example one:

Example two:

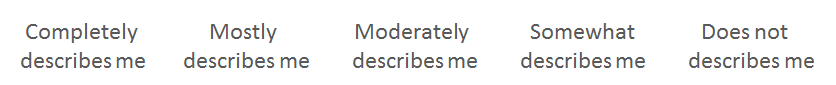

Another issue arises when answer categories wrap to create double or triple banks. Christian and Dillman showed back in 2004 that such formatting is problematic (see the example below). Nevertheless, these layouts are still common in some packages.

Example three:

The issues discussed above could make respondents less willing and less able to complete the questionnaire tasks we present to them, undermining the effectiveness of our survey. If respondents experience even slight confusion or frustration, it is all too easy for them to leave a question blank, or to break off and abandon the survey altogether. As early as 1993 (with paper self-completion questionnaires), Dillman, Sinclair and Clark showed the importance of a ‘respondent-friendly’ design with a clear visual layout. Dillman and his colleagues have since replicated and extended this finding across numerous articles and every edition of their books (1978, 2000, 2007, 2009 and 2014).

Aside from the software, there are important questionnaire decisions to be made, and, of course, more knowledge leads to better decisions. For example, it is useful to know that several popular question types are problematic (these include agree/disagree, tick-all-that-apply and Net Promoter Score questions). It is also useful to understand the questionnaire design concerns and possible mode effects involved in moving from interviewer-administered surveys to web and push-to-web surveys.

If these topics pique your interest, you can find out more in my live online course, Questionnaire Design for Mixed-Mode, Web and Mobile Web Surveys, which I am running with the NCRM on 1-3 March 2022.
